At my current company, there's been a big push to use AI to automate everything we do. Anything that requires human intervention, isn't super critical, and happens regularly is considered a good candidate to be automated with agentic AI.

For the last 3 years, I've started most of my work days by playing the daily Quordle — a Wordle variant that requires you to solve 4 words concurrently in 9 guesses or less. I find it challenging enough (compared to Wordle) that I don't win every time. It's also a good way to get the brain juices flowing.

But with the rise in AI tooling replacing every other aspect of my job, I won't need to use my brain much anymore. So I decided to automate this part of my day too.

If you don't care about the details, you can check out the final result here. I'd suggest trying to play today's Quordle first before seeing how the AI did!

The Process

I started out with a basic model, gpt-3.5-turbo, because I honestly expected it to excel at this sort of game. I've become so accustomed to LLMs exceeding my expectations when I try something new.

To my surprise, gpt-3.5-turbo did not understand the assignment. It failed to solve the Quordle every time, badly.

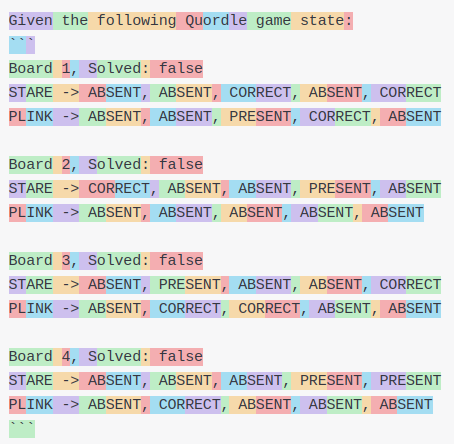

Here's an example of the prompt I was using:

Given the following Quordle game state:

Board 1, Solved: false

TABLE -> ABSENT, PRESENT, ABSENT, ABSENT, CORRECT

ORBIT -> ABSENT, ABSENT, ABSENT, PRESENT, ABSENT

Board 2, Solved: false

TABLE -> ABSENT, ABSENT, ABSENT, ABSENT, ABSENT

ORBIT -> PRESENT, PRESENT, ABSENT, ABSENT, ABSENT

Board 3, Solved: false

TABLE -> PRESENT, ABSENT, ABSENT, PRESENT, CORRECT

ORBIT -> ABSENT, ABSENT, ABSENT, PRESENT, PRESENT

Board 4, Solved: false

TABLE -> ABSENT, ABSENT, ABSENT, PRESENT, PRESENT

ORBIT -> ABSENT, PRESENT, ABSENT, ABSENT, ABSENT

Respond with only the next best guess as a single 5-letter English word.

Do not include any explanation, punctuation, or extra text.

Example response: apple

Only output a single 5-letter word and nothing else.

For one thing, it guessed "apple" way too often. You wouldn't think the example response wouldn't be that influential. On a few of the runs, it would inexplicably stick to a fruit theme too. LEMON, MELON, APPLE again...etc.

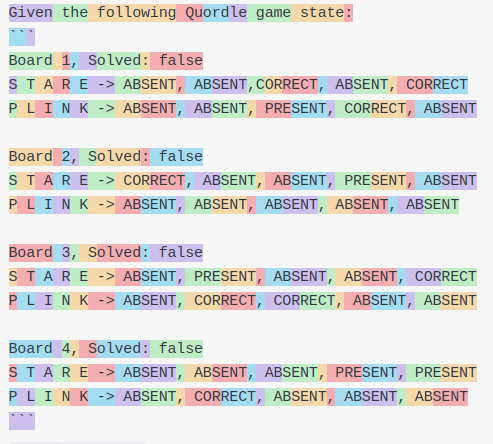

So I tried just throwing more AI at it. I switched the model to gpt-4.1 instead. It improved slightly—it varied the words more.

GPT 4....now with non-fruit related words

GPT 4....now with non-fruit related words

But it still didn't get close to solving it whatsoever. I thought it could be the prompt I was using. I wasn't actually explaining the rules of Quordle at all. It's possible there isn't much Quordle information in the model's training data. We all know LLMs are prone to being very confident and very wrong.

I revised the prompt to the following:

You are an expert at guessing words in the game Quordle.

Quordle is a word-guessing game similar to Wordle, but with a

more challenging twist. In Quordle, players simultaneously solve

four different 5-letter word puzzles using a single set of guesses.

Here are the key rules:

1. Goal: Guess four secret 5-letter words simultaneously

2. You have 9 total attempts to solve all four words

3. After each guess, tiles change color to provide feedback:

- CORRECT: Letter is correct and in the right position

- PRESENT: Letter is in the word but in the wrong position

- ABSENT: Letter is not in the word at all

Each word is independent, but you use the same guesses across all four

word puzzles. The challenge is to strategically choose words that help

you solve multiple puzzles efficiently. A successful game means

correctly guessing all four words within 9 attempts.

Given the following Quordle game state:

\```

{game state}

\```

Respond with only the next best guess as a single 5-letter English word.

Do not include any explanation, punctuation, or extra text.

The word must be a valid 5-letter word that has not been guessed yet.

Example response: charm

Only output a single 5-letter English word and nothing else.

But alas, things didn't improve much. Turns out it wasn't because the model doesn't know the rules.

Token Problems

Next, I took a look at how the words in the prompt were being tokenized. I have a basic understanding of tokens and I guessed that the individual letters weren't being parsed and correlated correctly.

I used OpenAI's tokenizer tool to see how the game state looked to the AI,

that MUST be why! The LLM can't "see" the individual letters of the previous guesses, and by extension it can't correlate them with the proceeding board results. That information is crucial for a letter-centric game like this.

I modified the guess word strings by splitting them into individual letters with spaces in-between; this was the result:

That looked better. Now the LLM would injest a different token for each character in the 5 letter word. I modified the serialization code to split the guess words up in the same way.

override fun toString() = "${word.toCharArray().joinToString(" ")} -> ${feedback.joinToString(", ") { it.name }}"

But as you might've guessed from the length of this blog post, that STILL didn't improve things...

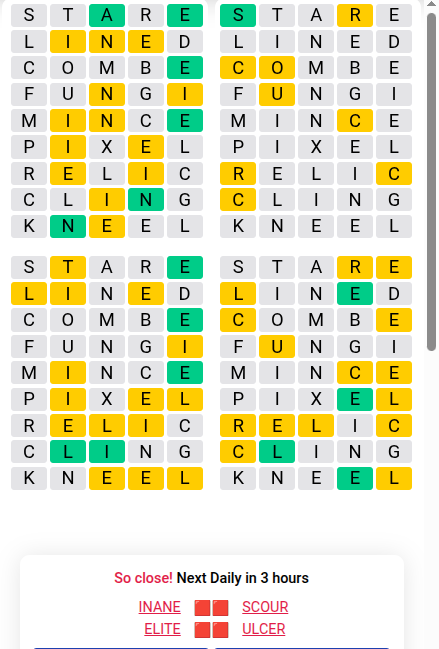

there's no participation medals here chatgippity...

there's no participation medals here chatgippity...

Back to Basics

Now I was starting to wonder if LLMs just aren't capable of doing the "word math" that is necessary for a game like Quordle. To test this theory, I tried tasking an LLM with a simpler game first: Wordle.

Instead of modifying my program, I ran the test using the chatGPT web UI. The result was very promising. The LLM performed WAY better when it was able to reason through the problem first. This is the thorough response it gave me:

Let's analyze the clues:

### First guess: `SLATE` → [ABSENT, **CORRECT**, ABSENT, **CORRECT**, **CORRECT**]

- **L is CORRECT** → L is in position 2.

- **T is CORRECT** → T is in position 4.

- **E is CORRECT** → E is in position 5.

- **S and A are ABSENT** → S and A are not in the word.

### Second guess: `CRIMP` → [ABSENT, ABSENT, **CORRECT**, ABSENT, ABSENT]

- **I is CORRECT** → I is in position 3.

- **C, R, M, P are ABSENT** → None of these letters are in the word.

### Summary of what we know:

- **Position 1**: Not S or C or R or M or P → unknown

- **Position 2**: L ✅

- **Position 3**: I ✅

- **Position 4**: T ✅

- **Position 5**: E ✅

So, we have: `_ L I T E`

Now we need a word that fits `_LITE`, where the first letter is **not** S, C, R, M, or P.

### Possible candidates:

- **ELITE** ✅

- **BLITE** (a rare plant term, acceptable in some wordlists)

- **FLITE** (rare British word for a quarrel, accepted sometimes)

But the most common and reasonable guess is:

### ✅ **ELITE**

Try guessing **ELITE**.

The key difference here is my original prompt instructed the LLM to return a single 5-letter word only, but the prompt I gave ChatGPT didn't specify that. I designed the original prompt that way to be able to parse the response programmatically. This test showed the LLM needs to work through an answer via output tokens.

This result led me to change the prompt in my program to instruct the LLM to reason about the answer before ending its output with a specific format:

[...more prompt]

At the end, output your final guess in this format:

Final Answer: <5-letter word>

You may include as much reasoning as you like, but the end of your response must be in the above format. Nothing

should be after the "Final Answer: <5-letter word>" line.

Example response:

\```

<other reasoning...>

Final Answer: CHARM

\```

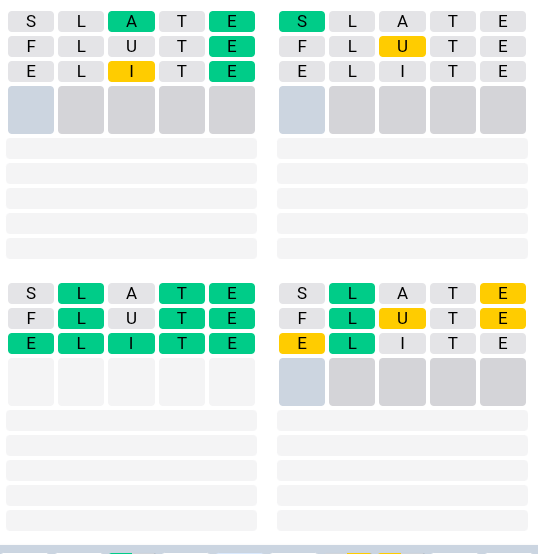

This allows the LLM to reason as much as it wants while still allowing me to parse the final answer using a simple string search afterwards. Lo and behold, it finally started getting some words right:

Unfortunately, it became super slow due to the drastic increase in output tokens.

First Taste of Success

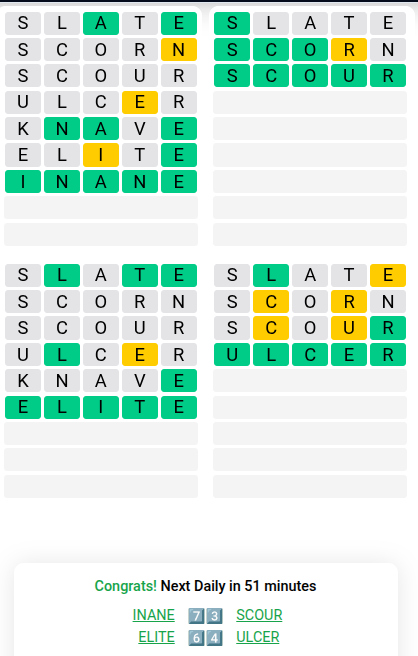

After seeing the improvement by allowing the LLM to reason, I upgraded the model again to o4-mini, which is one of OpenAI's "reasoning" models. This change resulted in my program's first official Quordle win!

However, it wasn't very reproducible—I think it got sort of lucky that first time.

I also found it was inconsistent with returning the Final Answer: <5-letter word> format I requested. I was getting frequent errors when parsing the chat completions. I switched to using structured JSON outputs to eliminate those. This feature is only available on the newer OpenAI models. It allows you to specify a JSON schema that the chat completion is guaranteed to adhere to.

// Definitions

val schemaJson = JsonObject(mapOf(

"type" to JsonPrimitive("object"),

"properties" to JsonObject(mapOf(

"reasoning" to JsonObject(mapOf(

"type" to JsonPrimitive("string"),

"description" to JsonPrimitive("Chain of thought reasoning leading to the final answer")

)),

"final_answer" to JsonObject(mapOf(

"type" to JsonPrimitive("string"),

"description" to JsonPrimitive("The final 5-letter word guess, in all uppercase")

))

)),

"additionalProperties" to JsonPrimitive(false),

"required" to JsonArray(

listOf(

JsonPrimitive("reasoning"),

JsonPrimitive("final_answer")

)

)

))

val responseSchema = JsonSchema(

name = "quordle_guess_response",

schema = schemaJson,

strict = true

)

val chatCompletionRequest = ChatCompletionRequest(

model = modelId,

messages = allMessages,

responseFormat = jsonSchema(responseSchema)

)

// Usage example:

val response = Json.decodeFromString<QuordleGuessResponse>(jsonString)

val finalAnswer = response.finalAnswer.trim().uppercase()

After switching to structured outputs, the parsing errors were completely eliminated.

Final Tweaks

It was still taking a looooong time to get responses from the model, especially on later guesses. I had a request timeout of 180 seconds, but it was exceeding that regularly. The fact that the later guesses took longer made me think it would benefit from reading all of it's previous responses / reasoning. I know it's common practice to do this, but I figured since the whole game state could be encoded in a single message, there wasn't any need to pass the previous messages.

Once I started sending the message history to the model on each subsequent guess, it started answering quicker. It could now use the previous reasoning to come to a conclusion faster.

I also changed the game state representation one more time. I encoded both the complete word, AND the individual letters with their corresponding result in a more condensed format:

Current Game State:

Attempts: 1 / 9

Board 1, Solved: false, Guess Results:

SOARE => S ✕, O ✕, A ↔, R ✕, E ✕

Board 2, Solved: false, Guess Results:

SOARE => S ✕, O ✕, A ✕, R ✕, E ↔

Board 3, Solved: false, Guess Results:

SOARE => S ✕, O ✕, A ✕, R ↔, E ↔

Board 4, Solved: false, Guess Results:

SOARE => S ✕, O ✕, A ✓, R ↔, E ✓

These two changes really improved the success rate of the program.

Reflections

Some things I learned as a result of this project:

- Chat-based LLMs don't actually "think" behind the scenes. The way they mimic thinking is by producing output tokens. Although the newer class of reasoning models do this implicitly and return summaries of their own outputs.

- Similarly, an LLM isn't really reading the words, punctuation, and formatting you're sending it—the way a human would. All it's consuming is a sequence of numbers. The way information is encoded and then tokenized can be critical for certain use cases.

- Sending previous chat messages helps LLMs when performing multi-step tasks that require reasoning and deduction. The context speeds up their responses.

Early on, I really thought I'd discovered a task that AI was actually not good at. But after a bit of "prompt engineering", I managed to build a reliable and successful automation for this complex word puzzle game.

Check it out here, and thanks for reading!